Unraveling the Magic: Understanding Inference in Generative AI

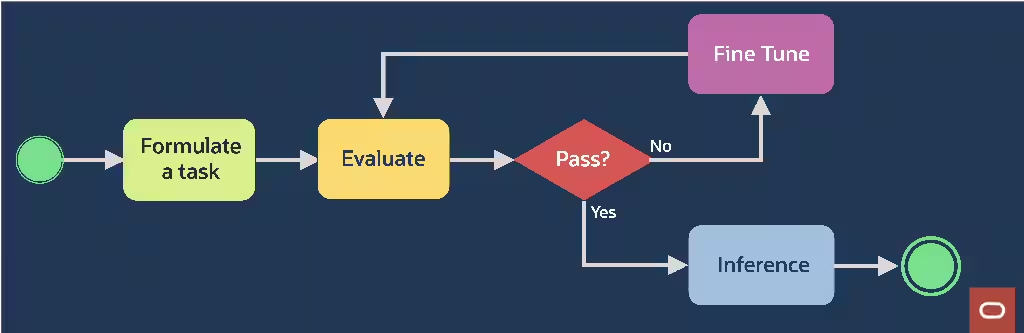

In the context of generative AI, “inference” refers to the process by which a trained AI model generates output from the input it receives.

Generative AI models, such as language models or image generators, work by learning patterns and relationships within a dataset during the training phase. Once trained, these models can generate new data that is similar to the examples they were trained on.

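During the inference phase, the trained model takes an input (which could be text, an image, or some other form of data) and generates an output based on its learned patterns. For example, a text generation model like GPT takes a prompt and continues it one token (a word or word fragment) at a time. At each step it predicts a likely next token given the prompt and everything it has generated so far, appends that token, and repeats until it reaches a stopping point. The result is text that is, ideally, coherent and relevant to the prompt.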
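
To make the split between training and inference concrete, here is a deliberately tiny sketch in Python. It is not how GPT works internally. It assumes a simple bigram model, which only learns which word tends to follow which, and it always picks the most frequent next word. Real language models use neural networks over tokens and usually sample from a probability distribution instead. The names `CORPUS`, `train_bigram`, and `generate` are hypothetical, chosen for illustration.

```python
from collections import Counter, defaultdict

# Hypothetical toy training data for illustration only.
CORPUS = [
    "the knight rode to the castle",
    "the princess waited in the castle",
    "a dragon flew over the castle",
]


def train_bigram(corpus: list[str]) -> dict[str, Counter]:
    """Training phase: count which word follows which in the examples."""
    counts: dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, following in zip(words, words[1:]):
            counts[current][following] += 1
    return counts


def generate(model: dict[str, Counter], prompt: str, max_new_words: int = 10) -> str:
    """Inference phase: extend the prompt one word at a time."""
    words = prompt.lower().split()
    for _ in range(max_new_words):
        if not words:
            break
        followers = model.get(words[-1])
        if not followers:
            # The model never saw anything follow this word, so it stops.
            break
        # Greedy choice: the follower seen most often during training.
        next_word, _count = followers.most_common(1)[0]
        words.append(next_word)
    return " ".join(words)


if __name__ == "__main__":
    model = train_bigram(CORPUS)
    print(generate(model, "the dragon"))
```

Running it prints `the dragon flew over the castle`, a sentence that does not appear in the training data. The model assembled it from word-to-word patterns it learned: "a dragon flew over the castle" supplied "dragon flew over", and "the" was most often followed by "castle". Always picking the most likely word keeps the example deterministic; sampling instead would produce more varied continuations.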

Let’s Understand It the Easy Way

Imagine you have a magical machine that can write stories. You feed it examples of stories you like, and it learns from them. Once it’s learned enough, you can give it a starting sentence, and it will continue the story for you.

The process of “inference” in generative AI is like asking your magical story-writing machine to continue a story based on a sentence you give it. The machine looks at the sentence, thinks about all the stories it’s learned before, and then comes up with the next part of the story.

So, in simpler terms, “inference” is just asking the AI to use what it’s learned to generate something new based on what you give it. It’s like using a magic storytelling machine to create new stories for you!