Breaking free from sequential processing: how the Transformer architecture revolutionized NLP.

Before the Transformer architecture arrived, recurrent neural networks (RNNs) and convolutional neural networks (CNNs) were the standard tools in machine learning for sequence modeling tasks such as language translation and text generation. However, these models struggled to capture long-range dependencies. They were also computationally inefficient, because an RNN must process a sequence one step at a time, which prevents parallelizing computation across positions.

The Problem with Previous Approaches:

RNNs process tokens one at a time, so information from early tokens must pass through every intermediate step. Gradients propagated back through many such steps tend to vanish, which makes long-range dependencies hard to learn. CNNs, on the other hand, see only a fixed-size window per layer, so relating tokens that are far apart requires stacking many layers.
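A back-of-the-envelope sketch makes both limitations concrete. It assumes two deliberately simplified models chosen for illustration, not the networks used in practice. The first is a toy scalar linear RNN, h_t = w·h_{t-1} + x_t, whose gradient of h_T with respect to h_0 is w^T. The second is a stack of ordinary (undilated) 1-D convolutions with kernel size k, whose receptive field after L layers is L(k - 1) + 1 tokens. The function names are hypothetical.

```python
import math


def rnn_gradient_scale(w: float, steps: int) -> float:
    """Gradient of h_T w.r.t. h_0 for the toy RNN h_t = w * h_{t-1} + x_t.

    Each step multiplies the gradient by w, so after `steps` steps it is w**steps.
    With |w| < 1 this shrinks exponentially (vanishing gradients).
    """
    return w ** steps


def conv_layers_needed(distance: int, kernel_size: int) -> int:
    """Undilated conv layers needed before two tokens `distance` apart can interact.

    Each layer widens the receptive field by (kernel_size - 1) tokens,
    so L layers cover L * (kernel_size - 1) + 1 tokens; we need
    L * (kernel_size - 1) >= distance.
    """
    return math.ceil(distance / (kernel_size - 1))


if __name__ == "__main__":
    for steps in (10, 50, 100):
        scale = rnn_gradient_scale(0.9, steps)
        print(f"RNN, w=0.9, {steps:3d} steps -> gradient scale {scale:.2e}")
    print("CNN, kernel 3, distance 100 ->", conv_layers_needed(100, 3), "layers")
```

With w = 0.9, the gradient signal falls below 3e-5 after 100 steps. A kernel-size-3 CNN needs 50 stacked layers before tokens 100 positions apart can interact. A single self-attention layer, by contrast, connects any two positions directly.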

Genesis of the Transformer Architecture:

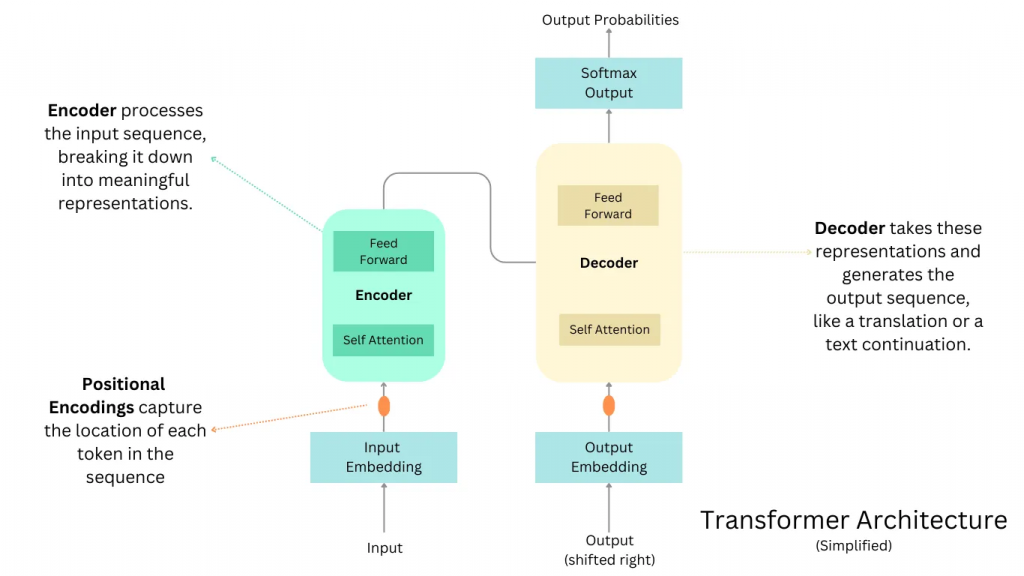

The Transformer architecture was proposed by Vaswani et al. in their groundbreaking 2017 paper “Attention Is All You Need.” It was conceived as a solution to the limitations of recurrent and convolutional sequence models. It dispenses with recurrence and convolution entirely and relies on attention mechanisms alone.

First Implementation:

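The first implementation of the Transformer architecture was presented in the same paper by Vaswani et al. It was an encoder-decoder model for machine translation, evaluated on the WMT 2014 English-to-German and English-to-French tasks. The model was built entirely out of self-attention and introduced scaled dot-product attention and multi-head attention. It combined these with positional encodings to convey token order, laying the foundation for modern Transformer-based models.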
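For reference, the paper defines scaled dot-product attention and multi-head attention as shown below. Here Q, K, and V are the query, key, and value matrices, d_k is the key dimension, h is the number of heads, and W_i^Q, W_i^K, W_i^V, and W^O are learned projection matrices:

$$
\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\left(\frac{QK^{\top}}{\sqrt{d_k}}\right)V
$$

$$
\mathrm{MultiHead}(Q, K, V) = \mathrm{Concat}(\mathrm{head}_1, \ldots, \mathrm{head}_h)\,W^{O}, \qquad \mathrm{head}_i = \mathrm{Attention}(QW_i^{Q},\, KW_i^{K},\, VW_i^{V})
$$

Each head attends within its own learned projection of the input. The paper motivates the 1/√d_k factor as keeping dot products from growing large with the key dimension, which would otherwise push the softmax into regions with extremely small gradients.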

How the Transformer Architecture Addressed These Problems:

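The Transformer addressed these problems with self-attention. In every layer, each token computes a weighted combination of all tokens in the sequence, so any two positions are connected in a single step regardless of how far apart they are. These computations do not depend on a previous time step, so they can run in parallel across the whole sequence, which makes training much faster than with RNNs. The trade-off is that the cost of self-attention grows quadratically with sequence length. For this reason, standard Transformers operate within a fixed maximum context window rather than being free of context limits altogether.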
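A minimal sketch of single-head scaled dot-product self-attention in plain Python shows this "every token looks at every token" computation. The sketch makes one simplifying assumption: queries, keys, and values are all the raw input vectors. Real models first project them with learned weight matrices, and those matrices are omitted here. The function names are illustrative, not any library's API.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def softmax(row: List[float]) -> List[float]:
    """Numerically stable softmax: subtract the row max before exponentiating."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x: Matrix) -> Tuple[Matrix, Matrix]:
    """Single-head scaled dot-product self-attention with Q = K = V = x.

    Simplifying assumption: no learned W^Q, W^K, W^V projections.
    Returns (outputs, weights).
    """
    d_k = len(x[0])
    scale = 1.0 / math.sqrt(d_k)
    # scores[i][j]: scaled dot product of query i with key j, for every pair (i, j)
    scores = [[scale * sum(a * b for a, b in zip(q, k)) for k in x] for q in x]
    # each row becomes a probability distribution over all positions
    weights = [softmax(row) for row in scores]
    # output i is the attention-weighted average of all value vectors
    outputs = [
        [sum(w * v[c] for w, v in zip(w_row, x)) for c in range(d_k)]
        for w_row in weights
    ]
    return outputs, weights


if __name__ == "__main__":
    tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # three toy 2-d token vectors
    outputs, weights = self_attention(tokens)
    for i, row in enumerate(weights):
        print(f"token {i} attention weights:", [round(w, 3) for w in row])
```

Each row of `weights` sums to 1 and covers every position in the sequence. No row depends on the result of another row, so all of them can be computed at once. This is the parallelism that sequential RNN updates lack.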

Latest Advancements:

Since its introduction, the Transformer architecture has seen numerous advancements and extensions. Notable developments include BERT (an encoder-only model), GPT (a decoder-only model), and T5 (an encoder-decoder model), which have pushed the boundaries of natural language processing. At the time of their release, these models achieved state-of-the-art performance in tasks such as language understanding, text generation, and question answering.

Best Examples of Transformer Applications:

Transformer architecture has been successfully applied in various applications, including machine translation, sentiment analysis, language understanding, text summarization, and more. One of the best examples of Transformer architecture in action is the BERT model, which has demonstrated exceptional performance in tasks such as question answering and natural language inference.

Types of Transformers and When to Use Which One:

- BERT (Bidirectional Encoder Representations from Transformers):

- Use Case: BERT is well-suited for tasks requiring bidirectional context understanding, such as natural language understanding, sentiment analysis, and named entity recognition.

- Advantages: BERT is pretrained with a masked language modeling objective, so each token can draw on both its left and right context. This makes it effective for tasks where understanding the full context is crucial.

- Example Application: BERT has been widely used for tasks such as question answering, text classification, and language inference.

- GPT (Generative Pre-trained Transformer):

- Use Case: GPT is ideal for tasks involving text generation and completion, such as dialogue generation, language modeling, and text summarization.

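- Advantages: GPT is a decoder-only model trained to predict the next token. It uses causal (masked) self-attention, so each position attends only to earlier positions, which makes it a natural fit for generating coherent, contextually relevant text one token at a time.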

- Example Application: GPT has been successfully applied in generating human-like text, writing poetry, and creating dialogue systems.

- T5 (Text-To-Text Transfer Transformer):

- Use Case: T5 is a versatile model that can be adapted to various tasks by framing them as text-to-text transformations, including translation, summarization, and question answering.

- Advantages: T5 provides a unified framework for different NLP tasks. The same model, training objective, and decoding procedure are used for every task, which simplifies training and fine-tuning on diverse datasets.

- Example Application: T5 has been used for tasks such as language translation, text summarization, and information retrieval.

- Transformer-XL:

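- Use Case: Transformer-XL addresses the fixed-length context of standard Transformers in two ways. It introduces segment-level recurrence, in which hidden states computed for the previous segment are cached and reused as extra context for the next one, and it adds relative positional encodings. Together these make it suitable for tasks that involve long-range dependencies.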

- Advantages: Transformer-XL can efficiently model dependencies across longer sequences, making it effective for tasks such as document-level language modeling and text generation.

- Example Application: Transformer-XL has been applied in tasks like document summarization, language modeling, and conversation modeling.

- XLNet:

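- Use Case: XLNet, which builds on Transformer-XL, addresses limitations of BERT's masked language modeling. The artificial [MASK] tokens seen during pretraining never appear during fine-tuning, and masked tokens are predicted independently of one another. XLNet avoids both problems, making it suitable for tasks requiring fine-grained understanding of context.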

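- Advantages: XLNet is trained autoregressively over randomly sampled factorization orders (permutation language modeling). The input tokens keep their original positions, and attention masks determine which tokens each prediction may see. Across many sampled orders, each token learns to use context from both sides, so the model captures bidirectional context without relying on masked language modeling.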

- Example Application: XLNet has been used for tasks such as language understanding, text classification, and document ranking.
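Much of the difference between these model families comes down to which positions a token is allowed to attend to. The sketch below builds boolean visibility masks, where True means position i may attend to position j, for four patterns:

- bidirectional, as in BERT
- causal, as in GPT
- a sampled factorization order, in the spirit of XLNet's permutation objective (simplified: XLNet's two-stream attention is omitted)
- a causal segment that can also see a cache of earlier states, in the spirit of Transformer-XL

The function names and the `mem_len` parameter are illustrative assumptions, not any library's API.

```python
import random
from typing import List, Sequence

Mask = List[List[bool]]


def bidirectional_mask(n: int) -> Mask:
    """BERT-style encoder: every token sees every token, left and right."""
    return [[True] * n for _ in range(n)]


def causal_mask(n: int) -> Mask:
    """GPT-style decoder: token i sees only positions j <= i."""
    return [[j <= i for j in range(n)] for i in range(n)]


def permutation_mask(order: Sequence[int]) -> Mask:
    """XLNet-style (simplified): token i may use token j as context if j comes
    strictly earlier than i in the sampled factorization order.
    The input positions themselves are never reordered; only the mask changes."""
    rank = {pos: t for t, pos in enumerate(order)}
    n = len(order)
    return [[rank[j] < rank[i] for j in range(n)] for i in range(n)]


def segment_memory_mask(seg_len: int, mem_len: int) -> Mask:
    """Transformer-XL-style (simplified): queries are the current segment;
    keys are mem_len cached states followed by the segment itself.
    Cached states are always visible; within the segment, attention is causal."""
    return [
        [j < mem_len or (j - mem_len) <= i for j in range(mem_len + seg_len)]
        for i in range(seg_len)
    ]


def show(name: str, mask: Mask) -> None:
    """Print a mask as a grid: 'x' = may attend, '.' = blocked."""
    print(name)
    for row in mask:
        print("  " + " ".join("x" if ok else "." for ok in row))


if __name__ == "__main__":
    n = 4
    show("bidirectional (BERT)", bidirectional_mask(n))
    show("causal (GPT)", causal_mask(n))
    order = list(range(n))
    random.Random(0).shuffle(order)  # one sampled factorization order
    show(f"permutation (XLNet), order={order}", permutation_mask(order))
    show("segment + memory (Transformer-XL), mem_len=2", segment_memory_mask(n, 2))
```

T5 combines two of these patterns. Its encoder uses the bidirectional mask, and its decoder uses the causal mask plus cross-attention to the encoder outputs. With the identity order `[0, 1, ..., n-1]`, the permutation mask reduces to a strictly causal mask. This illustrates that ordinary left-to-right language modeling is one of the orders XLNet samples from.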

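Choosing the right type of transformer depends on the specific requirements of the task at hand. Each family has its own strengths:

- encoder-only models such as BERT suit understanding tasks
- decoder-only models such as GPT suit generation
- encoder-decoder models such as T5 suit tasks that map one text to another
- long-context variants such as Transformer-XL suit long documents

By understanding these strengths, practitioners can select the most appropriate model architecture for their application.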