Docker vs. Virtual Machines: A Deep Dive into Hypervisor, Container Hosting, and Scaling for Multiple Projects

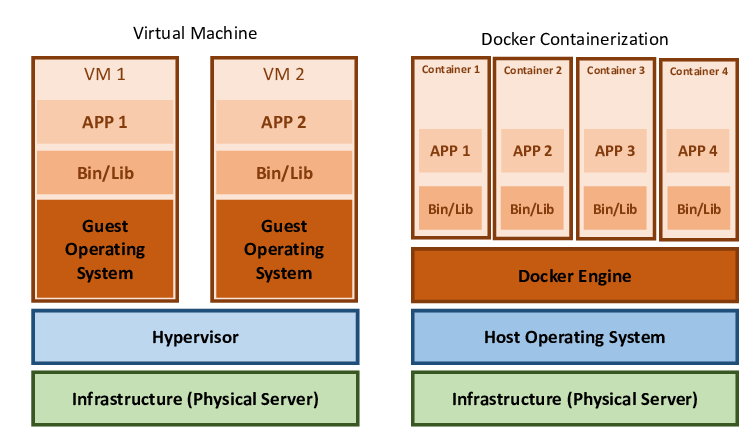

Docker and virtual machines (VMs) are both powerful technologies that allow developers to create isolated environments. However, they operate in fundamentally different ways. Docker, with its container-based architecture, has revolutionized development and scaling, offering a more efficient, flexible alternative to virtual machines. A key difference lies in how they utilize system resources and manage multiple projects.

This article explores how Docker handles multiple containers for different projects, the role of hypervisors in virtual machines, and how these differences impact development and scaling.

The Role of the Hypervisor in Virtual Machines

A hypervisor, also known as a virtual machine monitor (VMM), is the software that enables the creation and management of virtual machines. It sits between the host system’s hardware and the virtual machines, managing the distribution of hardware resources (CPU, memory, storage) to the VMs.

There are two types of hypervisors:

- Type 1 Hypervisor (Bare Metal) – Runs directly on the host’s hardware (e.g., VMware ESXi, Microsoft Hyper-V).

- Type 2 Hypervisor (Hosted) – Runs on a host operating system (e.g., VirtualBox, VMware Workstation).

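Each VM created through the hypervisor requires its own guest operating system (OS), which introduces substantial overhead in terms of resource consumption. The hypervisor assigns each VM a configured share of CPU, memory, and storage, and these resources are isolated from other VMs. Many hypervisors can overcommit or adjust these allocations at runtime, but memory handed to a running guest OS is generally much harder to reclaim than memory used by an ordinary process.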

Docker: Hosting Multiple Containers for Multiple Projects

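Docker, on the other hand, does not need a hypervisor when it runs on a Linux host. Instead of virtualizing hardware, Docker uses containerization to virtualize the operating system. Docker containers share the host OS kernel, and each container runs in its own isolated process space. This results in much lighter resource usage compared to VMs. (On macOS and Windows, Docker Desktop runs Linux containers inside a single lightweight VM, but all containers still share that one kernel.)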

With Docker, multiple containers can be hosted under the same Docker engine. This means you can run multiple containers for different projects on a single machine, with each container acting as a standalone environment. Docker provides tools like Docker Compose to manage multiple containers in a single project, allowing for seamless orchestration of different services (such as databases, web servers, etc.) in different containers.
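As a rough sketch of what such a multi-container project looks like, the Python snippet below builds a Compose-style project definition as a plain dictionary and serializes it to JSON. The project name `shop`, the service names `web` and `db`, the image tags, and the password value are all hypothetical placeholders, and the helper functions are invented for this illustration rather than being part of any Docker API.

```python
import json


def build_compose_project(name: str) -> dict:
    """Return a Compose-style definition with a web server and a database.

    Every name, image tag, and value here is an illustrative placeholder.
    """
    return {
        "name": name,
        "services": {
            "web": {
                "image": "nginx:alpine",
                "ports": ["8080:80"],      # host port 8080 -> container port 80
                "depends_on": ["db"],      # start the database first
            },
            "db": {
                "image": "postgres:16",
                "environment": {"POSTGRES_PASSWORD": "example"},
                "volumes": ["db-data:/var/lib/postgresql/data"],
            },
        },
        # Named volume so database files outlive the container.
        "volumes": {"db-data": {}},
    }


def to_compose_json(project: dict) -> str:
    """Serialize the definition; JSON is valid YAML, so Compose should accept it."""
    return json.dumps(project, indent=2)


if __name__ == "__main__":
    print(to_compose_json(build_compose_project("shop")))
```

Saving the output to a file such as `compose.json` and passing it to `docker compose -f compose.json up -d` should start both services, assuming your Compose version reads JSON files. In practice most projects write this file by hand in YAML; generating it from code is only done here to keep the example self-contained.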

How Docker Hosts Multiple Containers for Different Projects:

- Container Isolation: While containers share the same OS kernel, each container is isolated in terms of process space, networking, and file systems. This allows you to run containers for different projects on the same Docker engine without conflicts.

- Resource Efficiency: Unlike VMs, Docker containers don’t require full OS installations. They are lightweight and share resources dynamically. You can run hundreds or even thousands of containers on a single host, depending on your system’s resources, without the overhead of managing multiple guest OSs.

- Networking and Inter-Container Communication: Docker allows you to define custom networks between containers, meaning you can easily configure communication between containers of the same project while isolating them from other projects. This makes Docker ideal for microservices, where different services may need to communicate with each other, while maintaining separation from other projects.
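The per-project network isolation described above can be sketched as follows. This snippet only builds the `docker` command lines (as argument lists) and does not run them. The `<project>-net` and `<project>-<service>` naming scheme, the project names, and the helper functions are assumptions made for this example.

```python
def network_name(project: str) -> str:
    """Hypothetical naming convention: one user-defined network per project."""
    return f"{project}-net"


def create_network_cmd(project: str) -> list[str]:
    """Command that creates the project's own network."""
    return ["docker", "network", "create", network_name(project)]


def run_container_cmd(project: str, service: str, image: str) -> list[str]:
    """Command that starts a detached container attached only to its project network."""
    return [
        "docker", "run", "-d",
        "--name", f"{project}-{service}",
        "--network", network_name(project),
        image,
    ]


def plan_project(project: str, services: dict[str, str]) -> list[list[str]]:
    """Return the commands for one project: the network first, then each service."""
    commands = [create_network_cmd(project)]
    for service, image in services.items():
        commands.append(run_container_cmd(project, service, image))
    return commands


if __name__ == "__main__":
    # Two unrelated projects on the same Docker engine (names are placeholders).
    for project, services in {
        "blog": {"web": "nginx:alpine", "cache": "redis:7"},
        "shop": {"web": "nginx:alpine"},
    }.items():
        for cmd in plan_project(project, services):
            print(" ".join(cmd))
```

Containers attached to the same user-defined network can reach each other by container name, while containers on different user-defined networks cannot talk to each other by default. That is why both projects can have a `web` service without interfering with one another.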

Comparing Docker and Virtual Machines for Multi-Project Hosting

- Resource Utilization

VMs: Each virtual machine runs its own full operating system, leading to significant resource consumption. Even when a VM is idle, its guest OS keeps holding most of the memory assigned to it, and that memory is difficult to hand back to other VMs. Running multiple VMs for different projects can therefore be resource-intensive and inefficient.

Docker: Containers share the host’s OS kernel, making them lightweight. A container uses resources on demand, like any other process on the host, so multiple containers can run on the same system with much less overhead than VMs. This allows for much better utilization of system resources, because containers do not need a dedicated allocation up front the way VMs do.
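To make the difference concrete, here is a back-of-the-envelope calculation. All three figures (host memory, guest OS cost, application cost) are invented for illustration and are not measurements. The only point is that each VM pays for a guest OS in addition to the application, while a container pays for the application alone.

```python
def max_instances(host_memory_mib: int, per_instance_mib: int) -> int:
    """How many instances fit if each one needs per_instance_mib of memory."""
    if per_instance_mib <= 0:
        raise ValueError("per_instance_mib must be positive")
    return host_memory_mib // per_instance_mib


# Hypothetical figures, chosen only to make the arithmetic easy to follow.
HOST_MEMORY_MIB = 16 * 1024   # a 16 GiB host
GUEST_OS_MIB = 1024           # assumed memory cost of one guest OS
APP_MIB = 256                 # assumed memory cost of the application itself

# A VM carries the guest OS plus the application; a container only the application.
vm_count = max_instances(HOST_MEMORY_MIB, GUEST_OS_MIB + APP_MIB)
container_count = max_instances(HOST_MEMORY_MIB, APP_MIB)

print(f"VMs that fit:        {vm_count}")
print(f"Containers that fit: {container_count}")
```

With these made-up inputs the host fits 12 VMs but 64 containers. Real numbers depend heavily on the workload. If you do want to cap what a container may use, `docker run` accepts limits such as `--memory 512m` and `--cpus 1.5`.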

- Startup Time

VMs: Booting a virtual machine involves loading a full operating system, which can take anywhere from tens of seconds to several minutes. For each new project or environment, a new VM must be spun up, adding significant startup time.

Docker: Containers typically start in about a second or less once the image is available locally, as they do not need to boot a complete OS. This rapid startup makes it easy to switch between projects or spin up new environments, facilitating faster development cycles and testing.
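If you want to check startup time on your own machine rather than take the claim on trust, a small timing helper is enough. The sketch below measures how long any command takes to run to completion. The function name `time_command` is made up for this example, and the demo at the bottom times the Python interpreter itself so that it runs even where Docker is not installed.

```python
import subprocess
import sys
import time


def time_command(argv: list[str]) -> float:
    """Run a command to completion and return the elapsed wall-clock seconds."""
    start = time.perf_counter()
    subprocess.run(
        argv,
        check=True,                    # raise if the command fails
        stdout=subprocess.DEVNULL,     # discard output so printing does not skew timing
        stderr=subprocess.DEVNULL,
    )
    return time.perf_counter() - start


if __name__ == "__main__":
    # Stand-in command so the script works without Docker.
    elapsed = time_command([sys.executable, "-c", "pass"])
    print(f"elapsed: {elapsed:.3f} s")
```

On a machine with Docker, calling `time_command(["docker", "run", "--rm", "alpine", "true"])` gives a rough figure for creating, starting, and removing a minimal container. Run it twice, because the first call may include the time needed to pull the image.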

- Hosting Multiple Projects

VMs: Running multiple VMs for different projects means running multiple OS instances, each isolated from one another. While this offers strong isolation, it is resource-heavy, and the hypervisor adds a layer of complexity in resource allocation and management.

Docker: Docker allows you to run multiple containers on the same Docker engine without the overhead of separate OSs. Docker Compose can manage complex multi-container environments, making it easy to develop, test, and deploy multiple projects simultaneously on the same host machine. You can also separate environments (e.g., development, staging, and production) using different container configurations.

- Scalability

VMs: Scaling VMs involves provisioning additional virtual machines, which is slower and more resource-intensive. Each new VM needs its own OS, whether installed from scratch or cloned from a template, plus its own configuration. Additionally, scaling VMs requires careful management of hypervisor resource allocation so that the VMs do not compete for resources and degrade each other’s performance.

Docker: Scaling Docker containers is fast and efficient. You can simply replicate containers to scale horizontally and manage container scaling with orchestration tools like Kubernetes. Containers can be quickly deployed or removed based on demand, making scaling seamless. Docker’s ability to run microservices in different containers means that individual components of an application can be scaled independently based on load.
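Horizontal scaling of one component can be sketched in two steps: work out how many replicas the current load needs, then ask Compose for that many. The load and capacity figures, the service name `web`, and both helper functions below are hypothetical. The sketch assumes a Compose project that already defines that service, and it only builds the command without running it.

```python
import math


def replicas_needed(requests_per_second: float, capacity_per_replica: float) -> int:
    """Smallest replica count that covers the load, never fewer than one."""
    if capacity_per_replica <= 0:
        raise ValueError("capacity_per_replica must be positive")
    return max(1, math.ceil(requests_per_second / capacity_per_replica))


def scale_cmd(service: str, replicas: int) -> list[str]:
    """Compose command that brings the service to the given replica count."""
    return ["docker", "compose", "up", "-d", "--scale", f"{service}={replicas}"]


if __name__ == "__main__":
    # Assumed load of 450 req/s, with each replica assumed to handle 100 req/s.
    count = replicas_needed(450, 100)
    print(" ".join(scale_cmd("web", count)))
```

Because each service is scaled by name, a busy `web` tier can grow to five replicas while the other services in the same project stay at one. An orchestrator such as Kubernetes automates the same decision instead of leaving it to a script.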

- Portability

VMs: Virtual machines are less portable due to the larger size of the VM image (which includes a full OS). Moving VMs between different environments can be cumbersome, and they often need specific hypervisor configurations.

Docker: Docker containers are highly portable. A container image can run on any system that supports Docker and matches the image’s CPU architecture and kernel type (for example, a Linux image needs a Linux kernel, either on the host or in a VM that provides one), largely regardless of the underlying infrastructure. This makes it easier to move between development, staging, and production environments or even different cloud providers.

Drawbacks of Virtual Machines Compared to Docker

- Heavy Resource Use: Virtual machines consume more CPU, memory, and storage because they virtualize a complete set of hardware and run full OS installations. Running multiple VMs for different projects can strain system resources and lead to inefficiencies.

- Slower Performance: The additional layer introduced by the hypervisor, along with the overhead of running a full guest OS, reduces the performance of virtual machines compared to containers. For applications that require fast iteration or high scalability, Docker offers a significant performance advantage.

- Complexity in Managing Multiple Projects: With VMs, managing multiple environments for different projects involves creating and maintaining multiple VMs, each with its own OS and configuration. This increases complexity and overhead in both management and resource allocation.

Docker and virtual machines both have their place in modern development, but Docker has clear advantages when it comes to resource efficiency, portability, and scalability. VMs carry significant overhead because each one runs its own guest OS on top of the hypervisor, leading to increased resource consumption and slower startup times. Docker, by contrast, uses a shared OS kernel and isolates environments at the process level, allowing multiple containers to run efficiently under the same Docker engine.

For developers working on multiple projects or scaling microservices-based architectures, Docker offers a more streamlined, efficient approach. Its lightweight nature, rapid startup time, and ability to dynamically share resources make it a powerful tool for modern software development and scaling.