Unlocking Creativity: Exploring Generative AI’s Potential in Content Creation and Beyond

Generative AI refers to artificial intelligence systems that create new data or content that is similar to, but not identical to, the data they were trained on. This is in contrast to discriminative AI, which focuses on classification tasks such as determining whether an input belongs to one category or another.

Generative AI models, such as Generative Adversarial Networks (GANs) or autoregressive models like OpenAI’s GPT series, are trained on large datasets and learn the underlying patterns and structures within that data. Once trained, they can generate new content, such as images, text, music, or even videos, that exhibits similar characteristics to the training data.

The key idea behind generative AI is that the model learns an approximation of the distribution of its training data and then samples from it, which lets it produce novel and realistic outputs. This capability has applications in various fields, including art generation, content creation, data augmentation, and even drug discovery.
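To make the idea of learning a distribution and then sampling from it concrete, here is a deliberately tiny sketch. It assumes the training data is a handful of numbers that a single Gaussian describes well; the data values and the choice of a Gaussian are illustrative assumptions, and real generative models learn far richer distributions with neural networks.

```python
import random
import statistics

# Hypothetical training data: a few measurements clustered around 5.0.
training_data = [4.8, 5.1, 5.0, 4.9, 5.2]

# "Training": estimate the parameters of the assumed Gaussian distribution.
mu = statistics.mean(training_data)
sigma = statistics.stdev(training_data)

# "Generation": draw new values from the fitted distribution.
rng = random.Random(42)  # fixed seed so the run is repeatable
samples = [rng.gauss(mu, sigma) for _ in range(5)]

print("fitted mean:", mu)
print("new samples:", samples)
```

The new samples resemble the training values without being copies of them, which is the same principle, at toy scale, that lets larger models generate new images or text.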

GPT and similar models belong to the category of autoregressive generative models. They work by predicting the next word or token in a sequence given the preceding context. This enables them to generate coherent and contextually relevant text based on prompts or starting phrases provided by users.
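The next-token loop can be sketched with a toy bigram model. This is only an illustration of autoregressive generation, not how GPT is implemented: GPT-style models use a neural network that conditions on the whole preceding context, whereas this sketch looks only at the previous word. The corpus and the function names `train_bigram` and `generate` are made up for the example.

```python
import random
from collections import defaultdict

def train_bigram(tokens):
    """Record which tokens follow each token in the training sequence."""
    followers = defaultdict(list)
    for current, nxt in zip(tokens, tokens[1:]):
        followers[current].append(nxt)
    return followers

def generate(followers, start, max_tokens, rng):
    """Generate tokens one at a time, each sampled given the previous token."""
    output = [start]
    for _ in range(max_tokens):
        candidates = followers.get(output[-1])
        if not candidates:  # no known continuation: stop early
            break
        output.append(rng.choice(candidates))
    return output

# Hypothetical miniature training corpus.
corpus = "the cat sat on the mat and the cat slept on the sofa".split()
model = train_bigram(corpus)

text = generate(model, "the", 8, random.Random(0))
print(" ".join(text))
```

Each generated word is appended to the sequence and becomes the context for the next prediction, which is what "autoregressive" means.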

Generative AI encompasses a broad range of models and techniques designed to create new content across various domains, and large language models play a significant role within this field, particularly in generating textual content.

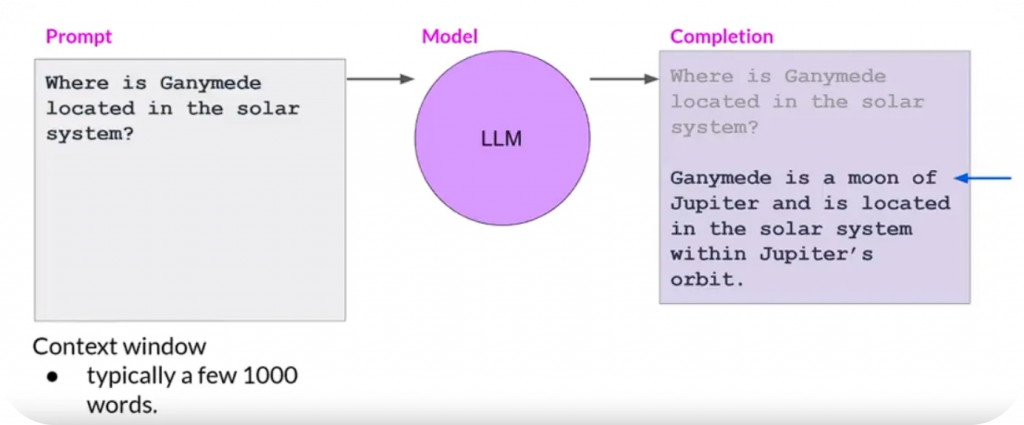

General diagram of the LLM process

Prompt: This is like giving instructions or asking a question to our large language model (LLM). It’s what we feed into the system to get a response.

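Context Window: Think of this as the space or ‘memory’ where the prompt and relevant information reside. It’s like the LLM’s workspace. Its size is measured in tokens (word pieces) rather than words and varies by model: earlier models could hold roughly a few thousand words, while newer ones can hold considerably more.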

LLM Model: This is the powerhouse! It’s the part of the system that has been trained to understand language patterns and generate responses. When we input our prompt and context into the model, it crunches the data and comes up with a response.

Completion: After processing the input (prompt and context), the model generates a response. This response is called a completion. It’s the answer, suggestion, or continuation of text provided by the LLM based on the input it received.

Inference: This is the process of asking a question or inputting a prompt into the model and receiving a completion as a response. So, when we interact with the LLM by asking it questions or giving it prompts, we are essentially engaging in inference.
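The terms above can be tied together in a short sketch. Everything here is a simplifying assumption: tokens are approximated by whitespace-separated words, the window size of 12 is arbitrary, and `toy_model` is a hypothetical stand-in that returns a canned string rather than calling a real LLM.

```python
def count_tokens(text):
    # Assumption: one whitespace-separated word counts as one token.
    return len(text.split())

def fit_to_context_window(context, prompt, max_tokens):
    """Drop the oldest context words until context plus prompt fits."""
    budget = max_tokens - count_tokens(prompt)
    if budget < 0:
        raise ValueError("prompt alone exceeds the context window")
    words = context.split()
    kept = words[-budget:] if budget > 0 else []
    return " ".join(kept + prompt.split())

def toy_model(model_input):
    """Hypothetical stand-in for an LLM: returns a canned completion."""
    return f"(completion based on {count_tokens(model_input)} input tokens)"

def infer(context, prompt, max_tokens=12):
    """Inference: send prompt and context to the model, get a completion."""
    model_input = fit_to_context_window(context, prompt, max_tokens)
    return toy_model(model_input)

print(fit_to_context_window("a b c d e", "x y", max_tokens=4))
print(infer("a b c", "q"))
```

The point of the sketch is the flow: the prompt and context must fit inside the context window, the model turns that input into a completion, and the whole round trip is inference.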

Prompt and context example during input phase

Input Text / context: “The company is planning to launch a new product in the market. They’ve conducted extensive market research and identified a gap that their product can fill. The target audience is young professionals who are looking for convenient and affordable solutions.”

Prompt: “Given the information provided above, what do you think would be the key factors for the success of the company’s new product?”

In this example, the input text provides background information about a company’s plan to launch a new product, including details about market research and the target audience. The prompt then asks a specific question based on this information, steering the model toward a response that focuses on the key success factors for the new product.
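As a rough sketch, the context and prompt from this example might be combined into a single input string before being sent to a model. The `Context:` and `Question:` labels and the function name `build_model_input` are assumptions made for illustration; different systems format their input differently.

```python
def build_model_input(context, prompt):
    """Place the background context first, then the question that refers to it."""
    return f"Context:\n{context.strip()}\n\nQuestion:\n{prompt.strip()}"

context = (
    "The company is planning to launch a new product in the market. "
    "They've conducted extensive market research and identified a gap "
    "that their product can fill. The target audience is young "
    "professionals who are looking for convenient and affordable solutions."
)
prompt = (
    "Given the information provided above, what do you think would be "
    "the key factors for the success of the company's new product?"
)

model_input = build_model_input(context, prompt)
print(model_input)
```

Putting the context before the question matches the wording of the prompt, which refers to "the information provided above".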